- Abstractly projecting data features into higher dimensions

- Applying linear models to projections

- The Kernel Ridge Regression formula

- Identify important hyper parameters

Extracting features without getting your hands dirty

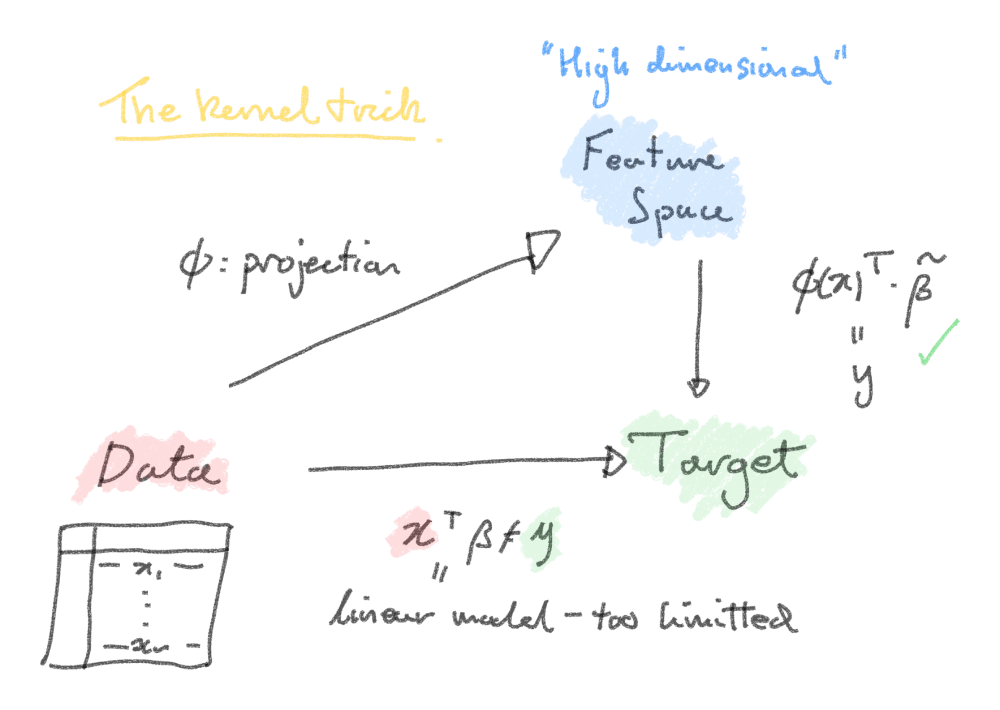

Let's consider our data problem more generally. If we have a -dimensional dataset , we can to stretch it into a much higher number of dimensions so that the problem is more easily separable. This leaves us defficit in on two accounts:

- Tractability: as the number of dimensions increases, fitting models involve learning more parameters which may require vast amounts of computational resources.

- Explainability: with large amounts of dimensions , it can be difficult to systematically decide how to project the features into even higher dimensions .

Enter: The Kernel Trick

Let's recount the solution to the least squares regression problem. But first of all, let's postulate a projection of our data into a higher dimensional space. Our linear model from before now reads as for a larger vector of parameters. The analytical solution to minimising the squares of parameters does not depend on the projected features themselves, but the products between them (written as for any two ).

The so-called kernel trick is to recognise these products as the outputs of special types of functions, called "kernels". The outputs will depend only on pairs of input data features :

Diagram to show the shortcut taken by the kernel trick.

Making careful choices of the kernel function avoids need for ever explicitly writing down , which for very large may be very inconvenient to do.

The higher projection dimension is always treated theoretically, and with the kernel trick we can evaluate models in the projected space via scalar products. In this way, we can formalise the method to treat models in infinite dimensional spaces without having to explicitly compute in these spaces.