- Have expanded your toolkit of models to include the Support Vector Machine (SVM) and Kernel Ridge Regressor (KRR) kernel machines for classification and regression tasks.

- Have developed a theoretical background in non-parametric feature extraction for building advanced machine learning models.

- Understand how and when to select non-parametric (flexible/agnostic) machine learning models over parametric (or pre-specified) models.

- Be able to interpret these models in different scenarios to explain your results and understand performance.

Machine learning comes in many flavours. In the Using General Linear Models for Machine Learning course, we saw many examples of parametric machine learning models: those where we take a guess at the form of the function mapping inputs to outputs. In this course, we are going to step things up a gear by building a toolkit of non-parametric models, with a focus on kernel machines.

But first, let's answer a few questions:

- What are non-parametric models? They simply give the machine learning algorithm the freedom to find the function that best fits the data. These models are naturally flexible and can be applied to different types of dataset.

- What are kernel machines? They are derived from simple parametric models - in our case, linear models - and extended via the kernel trick to non-parametric approximators. Specifically, we take a look at the Kernel Ridge Regressor (KRR) and the Support Vector Machine (SVM), two powerful machine learning models.
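To make the kernel trick a little more concrete before we begin, here is a minimal sketch in Python with NumPy. The degree-2 polynomial kernel and the helper names `phi` and `poly_kernel` are illustrative assumptions rather than part of the course material. The sketch checks that evaluating the kernel in the original 2-D space gives the same number as an inner product in an explicit 3-D feature space.

```python
import numpy as np

def phi(x):
    """Explicit degree-2 feature map for a 2-D input (illustrative choice)."""
    x1, x2 = x
    return np.array([x1**2, np.sqrt(2) * x1 * x2, x2**2])

def poly_kernel(x, z):
    """Homogeneous polynomial kernel of degree 2: k(x, z) = (x . z)^2."""
    return np.dot(x, z) ** 2

x = np.array([1.0, 2.0])
z = np.array([3.0, -1.0])

# Inner product computed after projecting into the 3-D feature space.
explicit = np.dot(phi(x), phi(z))

# The same quantity computed directly in the original 2-D space.
implicit = poly_kernel(x, z)

print(explicit, implicit)
```

The two values agree, which is the whole point: a model that only needs inner products between samples can work in the feature space without ever constructing `phi`.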

As we build up our toolkit of advanced models, we shall naturally consider the following topics:

Feature extraction, non-parametric modelling, decision surface analysis and Lagrange multipliers in optimisation.

These are a few of the big concepts we'll unpack in this course. They lay the foundation for many pattern recognition techniques used by professionals in modern-day research - they also help form some of the most powerful modelling tools we have to date.

In this course, you will get to grips with:

- Non-parametric models, unlocking their advanced predictive power over parametric techniques.

- Implementing and tuning advanced machine learning techniques with detailed code walk-throughs.

- The theory underpinning the techniques used, so you have the expertise to interpret model predictions and understand the structure.

- How to quickly build a data science pipeline using the advanced models in the course.

This course follows neatly from where Tim left off in Using General Linear Models for Machine Learning. In Tim’s course, we covered the basic ideas around machine learning practice. In this course, we build on that foundation and start applying some advanced, professional-standard tools to get the best results.

Section 1: Don’t Be Fooled by the Kernel Trick.

We’ll start unpacking the notion of ‘non-parametric models’ with an excellent prototype:

Projecting data features into a high-dimensional space where a linear model can solve the task.

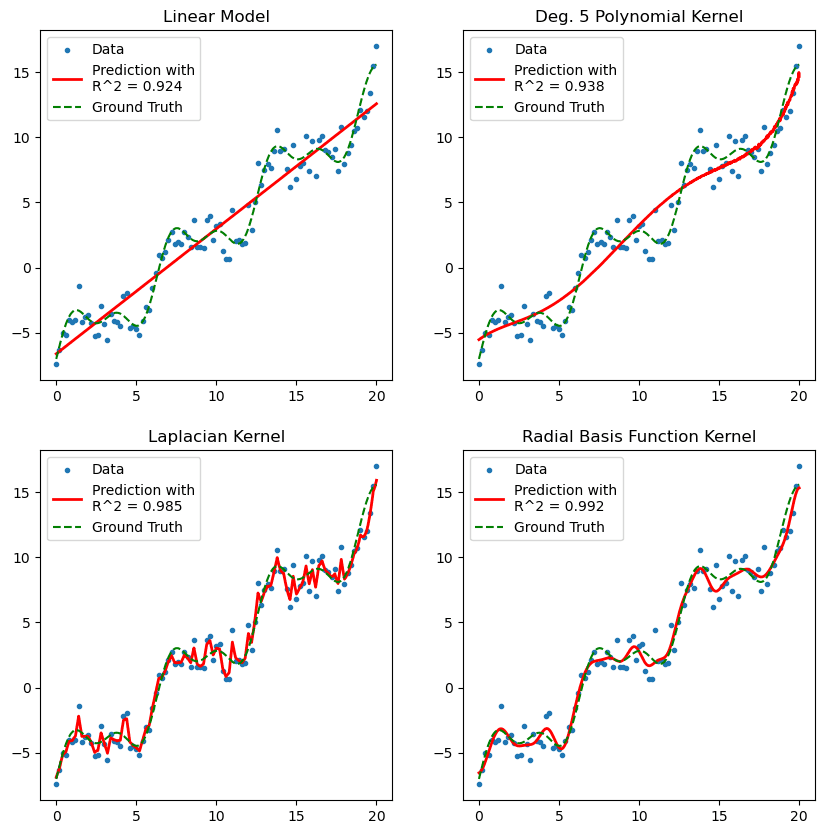

The kernel trick we play means we never have to write down the projection, nor solve for the associated high-dimensional parameter vector. The derivation starts in our comfort zone - linear regression - and cleanly delivers a non-parametric model capable of cheaply modelling difficult non-linear data.

In this section, we shall unpack this technique and inspect the various hyper-parameters and kernel functions that need to be selected for the problem at hand.

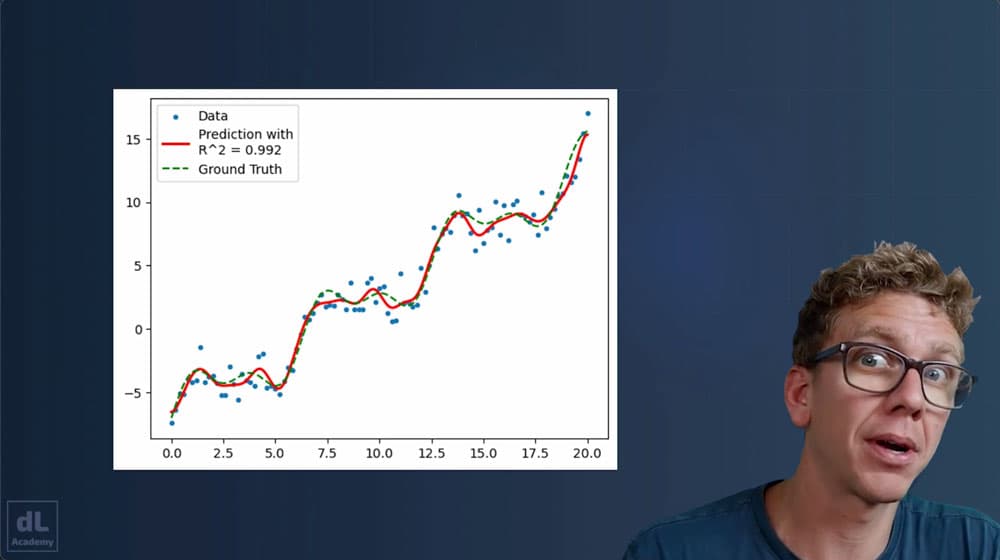

Figure 1. Relaxing a linear least-squares regression with the kernel trick allows for more general choices of predictive function.
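As a taster of what this looks like in practice, here is a minimal sketch of kernel ridge regression with NumPy. It assumes a Gaussian (RBF) kernel, and the names `rbf_kernel`, `krr_fit` and `krr_predict`, the toy sine data, and the values of `lam` and `gamma` are all hypothetical choices for illustration, not the course's own implementation. Notice that we solve for one coefficient per training sample and never form a projection or a high-dimensional parameter vector.

```python
import numpy as np

def rbf_kernel(A, B, gamma=1.0):
    """Gaussian (RBF) kernel matrix between the rows of A and the rows of B."""
    sq_dists = (
        np.sum(A**2, axis=1)[:, None]
        + np.sum(B**2, axis=1)[None, :]
        - 2.0 * A @ B.T
    )
    return np.exp(-gamma * sq_dists)

def krr_fit(X, y, lam=1e-2, gamma=1.0):
    """Solve (K + lam * I) alpha = y for the dual coefficients alpha."""
    K = rbf_kernel(X, X, gamma)
    return np.linalg.solve(K + lam * np.eye(len(X)), y)

def krr_predict(X_train, alpha, X_new, gamma=1.0):
    """Predict as a kernel-weighted sum over the training samples."""
    return rbf_kernel(X_new, X_train, gamma) @ alpha

# Toy non-linear data (assumed for illustration): a noisy sine wave.
rng = np.random.default_rng(0)
X = np.linspace(0.0, 2.0 * np.pi, 40)[:, None]
y = np.sin(X[:, 0]) + 0.05 * rng.standard_normal(40)

alpha = krr_fit(X, y, lam=1e-2, gamma=1.0)

X_test = np.array([[np.pi / 2.0], [np.pi]])
y_pred = krr_predict(X, alpha, X_test, gamma=1.0)
print(y_pred)
```

The regularisation strength `lam` and the kernel width `gamma` are exactly the kind of hyper-parameters that need to be selected for the problem at hand.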

Section 2: Support Vector Machines and They'll Support You.

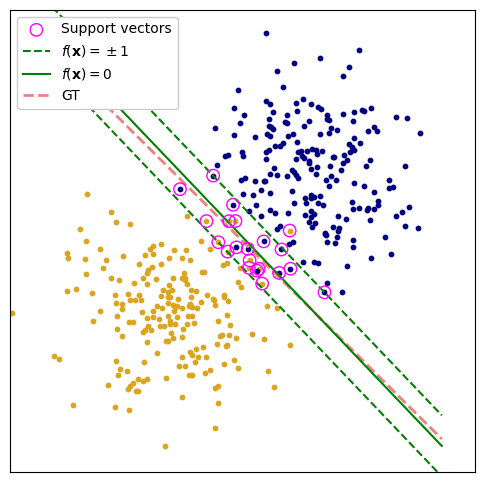

Support Vector Machines were among the most powerful predictors, and the cutting edge of machine learning, before deep learning came along. They remain a valuable collection of models to keep in your toolkit, and they can be applied to regression and classification tasks alike.

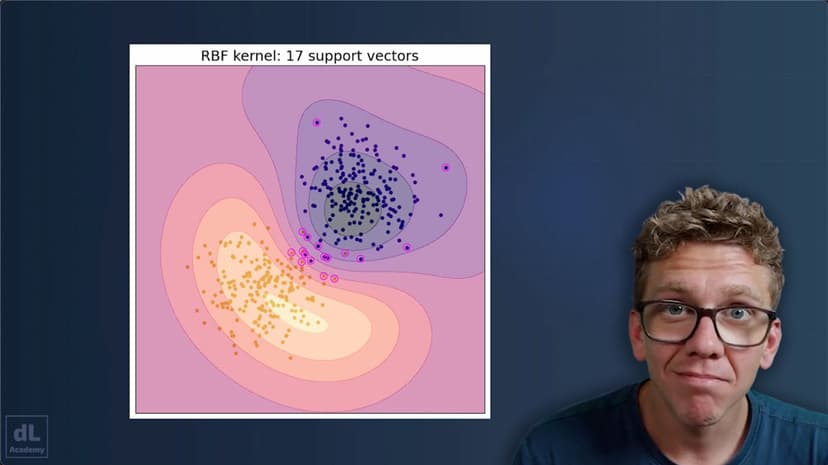

Combined with the kernel trick, these become highly flexible, non-parametric machine learning algorithms that give unique insight into the most impactful data points in your training set; these are identified as support vectors.

We'll examine support vector machines for a range of tasks and visualise how support vectors may be identified within the model. Crucially, support vector machines are able to sparsify a dataset of many samples by selecting only those necessary to generate a predictive model.

Figure 2. A linear SVM vs. a non-parametric 'kernel' SVM for predicting decision boundaries.
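To give a flavour of this sparsity, here is a minimal sketch using scikit-learn's `SVC`. The ring-shaped toy dataset and the values of `C` and `gamma` are assumptions made purely for illustration. After fitting, the model exposes the samples it kept as support vectors, and we can compare their number with the size of the training set.

```python
import numpy as np
from sklearn.svm import SVC

# Toy data (assumed for illustration): an inner disc (class 0)
# surrounded by an outer ring (class 1), which no straight line can separate.
rng = np.random.default_rng(0)
n = 100
angles = rng.uniform(0.0, 2.0 * np.pi, 2 * n)
radii = np.concatenate([rng.uniform(0.0, 0.8, n), rng.uniform(1.2, 2.0, n)])
X = np.column_stack([radii * np.cos(angles), radii * np.sin(angles)])
y = np.concatenate([np.zeros(n, dtype=int), np.ones(n, dtype=int)])

# Kernel SVM with an RBF kernel; C and gamma are hand-picked, not tuned.
clf = SVC(kernel="rbf", C=10.0, gamma=1.0).fit(X, y)

n_sv = clf.support_vectors_.shape[0]   # samples retained by the model
train_acc = clf.score(X, y)            # accuracy on the training set

print(f"{n_sv} support vectors out of {len(X)} samples")
print(f"training accuracy: {train_acc:.2f}")
```

Only the samples listed in `clf.support_` contribute to the decision function; the remaining points could be removed from the training set without changing the fitted boundary.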

So, if you're ready to take your machine learning to the next level, let's get started!
