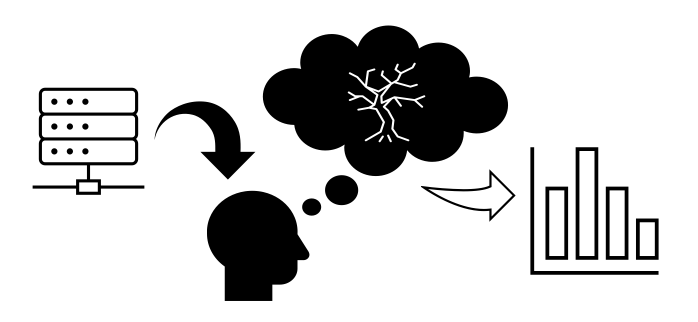

In this course we shall climb the heights of a fundamental machine learning concept: the decision tree. Decision trees are the rootstock of some of the most effective algorithms on the market, so we examine them from the ground up. Applicable to both classification and regression problems, these non-parametric models are also useful because the decisions they produce can be interrogated directly.

We shall cover:

- A heuristic demonstration of decision tree logic.

- Tree terminology: roots, leaves and splitting.

- The machine learning approach.

- Simple examples: classification and regression.
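
As a first taste of the terminology listed above, here is a minimal sketch of a hand-written tree in plain Python. It is not a learned model: the feature names (`temperature`, `humidity`), the thresholds and the leaf values are all hypothetical, chosen only to show a root, two splits and their leaves. A classification tree stores labels in its leaves; a regression tree would store numbers in the same structure.

```python
# A toy decision tree written by hand (illustrative values, not fitted to data).
# Internal nodes hold a feature and a threshold; leaves hold the prediction.
toy_classifier = {
    "feature": "temperature",      # root node: the first split
    "threshold": 15.0,
    "left": {"leaf": "stay in"},   # reached when temperature <= 15.0
    "right": {
        "feature": "humidity",     # second split, below the root
        "threshold": 70.0,
        "left": {"leaf": "go out"},
        "right": {"leaf": "stay in"},
    },
}

# The same structure with numeric leaves behaves like a regression tree.
toy_regressor = {
    "feature": "temperature",
    "threshold": 15.0,
    "left": {"leaf": 2.0},
    "right": {"leaf": 8.5},
}


def predict(node, sample):
    """Walk from the root to a leaf, following one branch per split."""
    while "leaf" not in node:
        go_left = sample[node["feature"]] <= node["threshold"]
        node = node["left"] if go_left else node["right"]
    return node["leaf"]


cold_day = {"temperature": 10.0, "humidity": 50.0}
warm_dry_day = {"temperature": 22.0, "humidity": 40.0}

print(predict(toy_classifier, cold_day))
print(predict(toy_classifier, warm_dry_day))
print(predict(toy_regressor, warm_dry_day))
```

In the machine learning approach, the splits and leaf values are chosen from training data rather than written by hand; only the prediction walk stays the same.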